Future-Proof Your Workforce With Smart HR Tools

HRForecast provides you with Big Data insights & services to make better workforce & business decisions.

Find out how Siemens has benefited from our services

Learn more

50%

of the DAX 30 companies work with us

Learn more

Use cases

Answers to your HR questions.

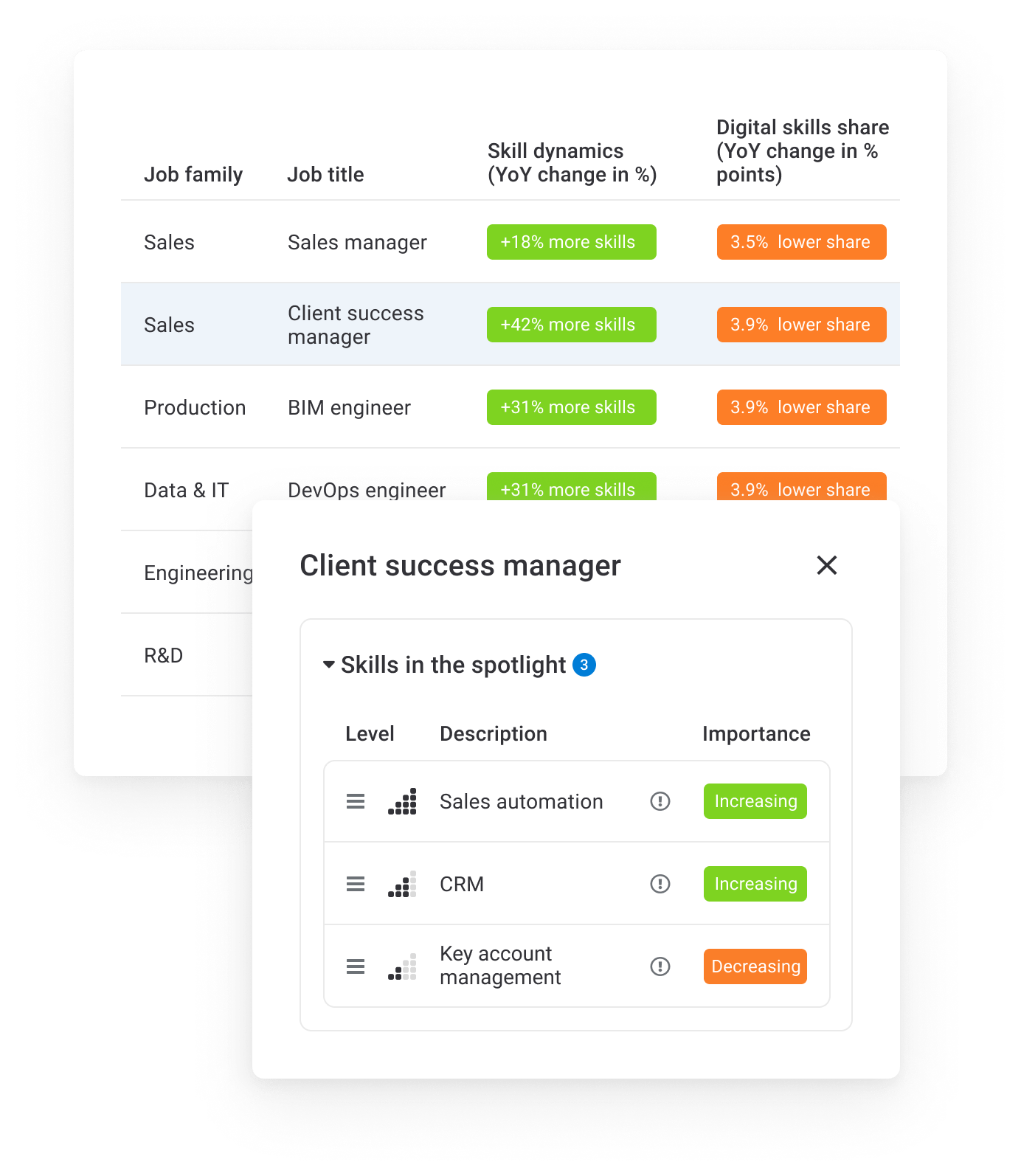

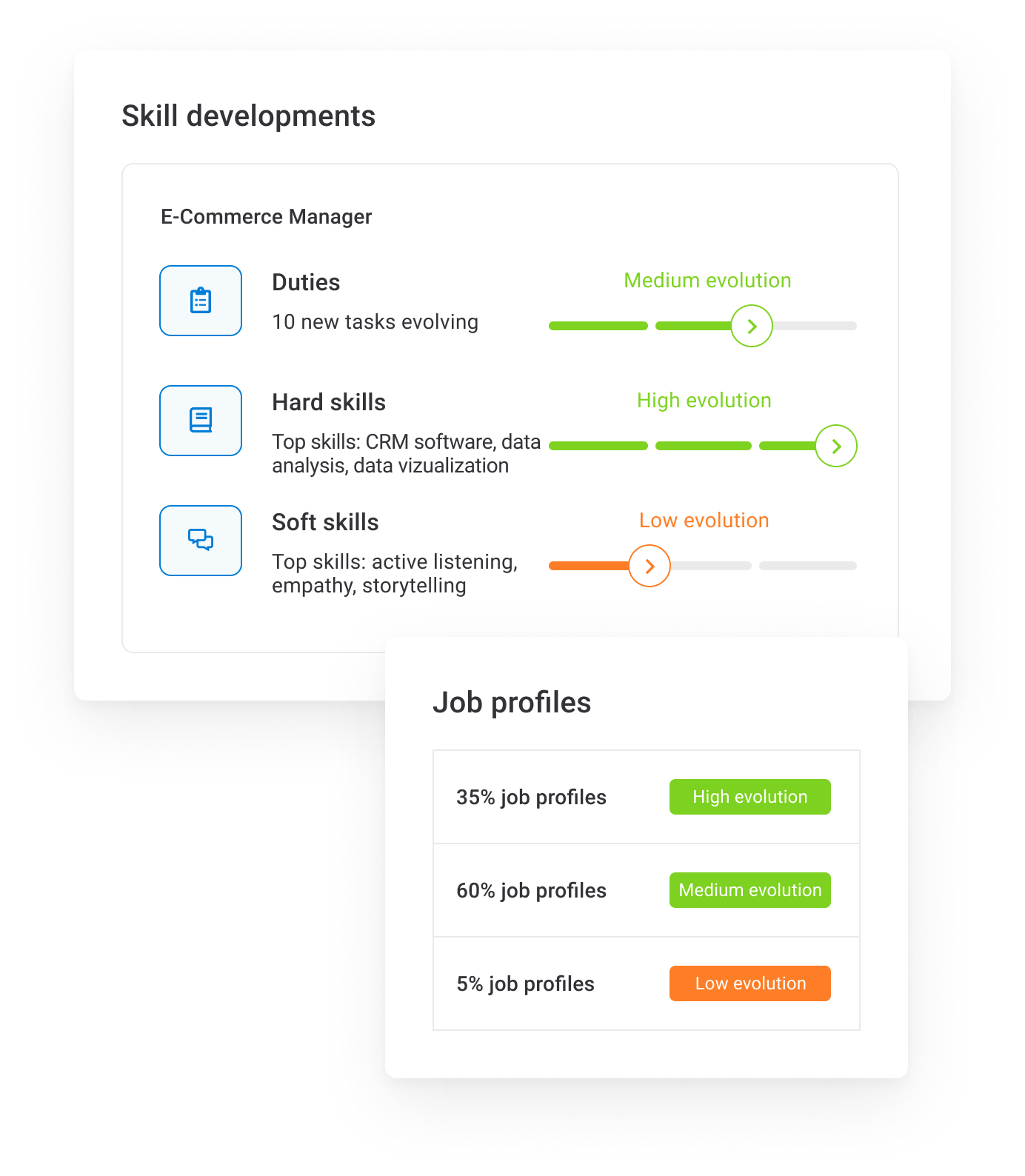

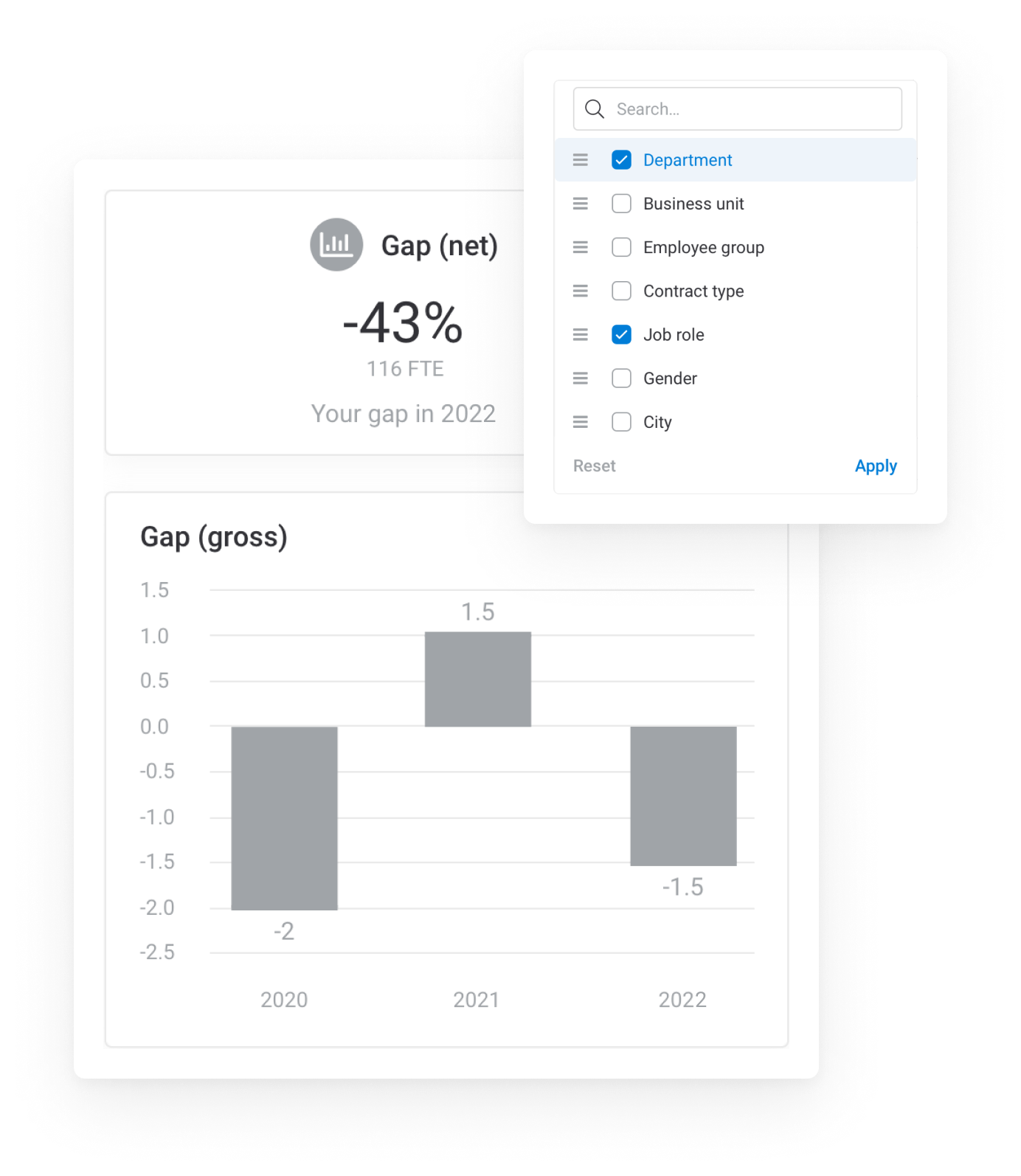

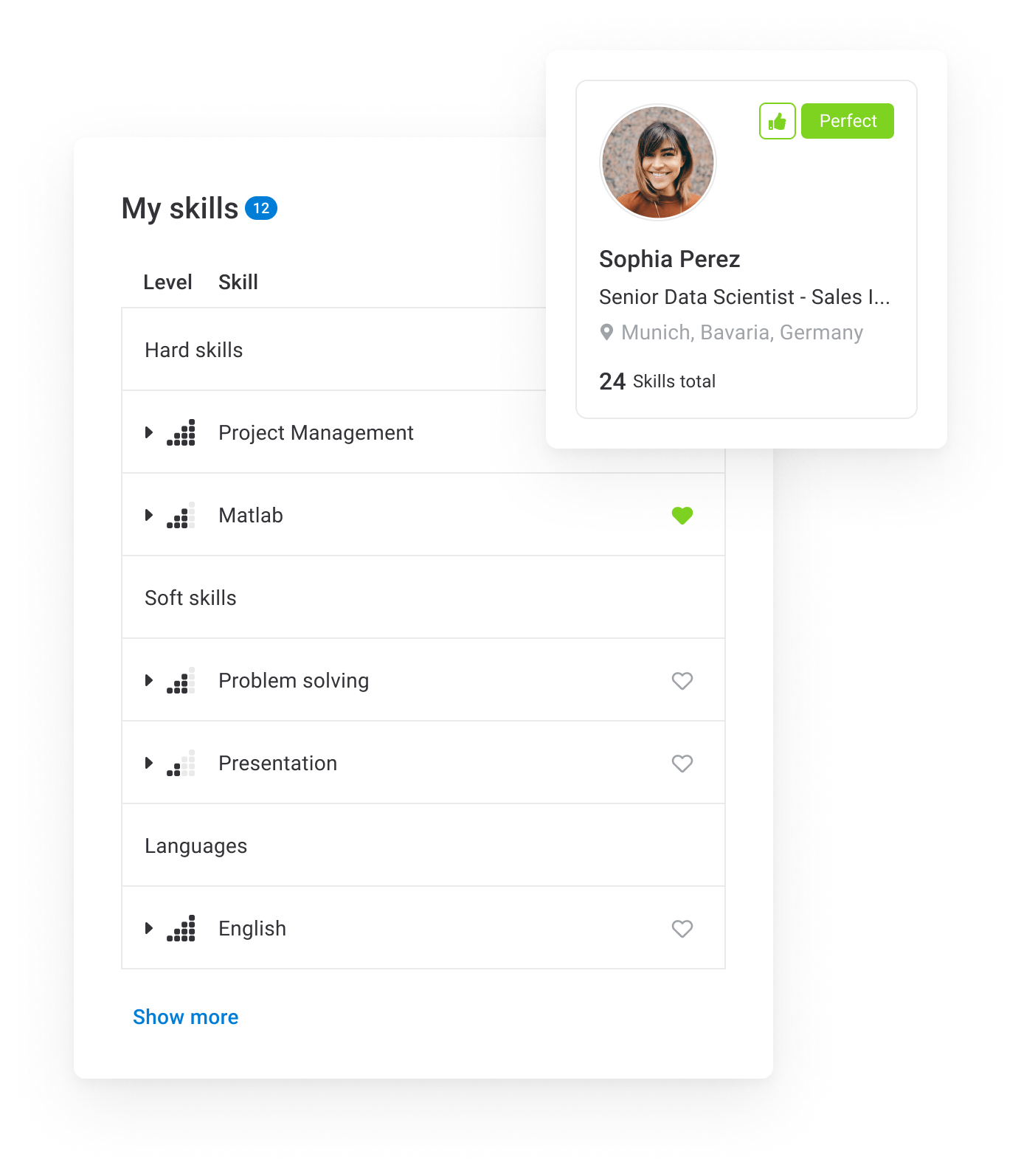

smartData

Market Intelligence

Access to the world’s largest labor market database to tune your business and HR.

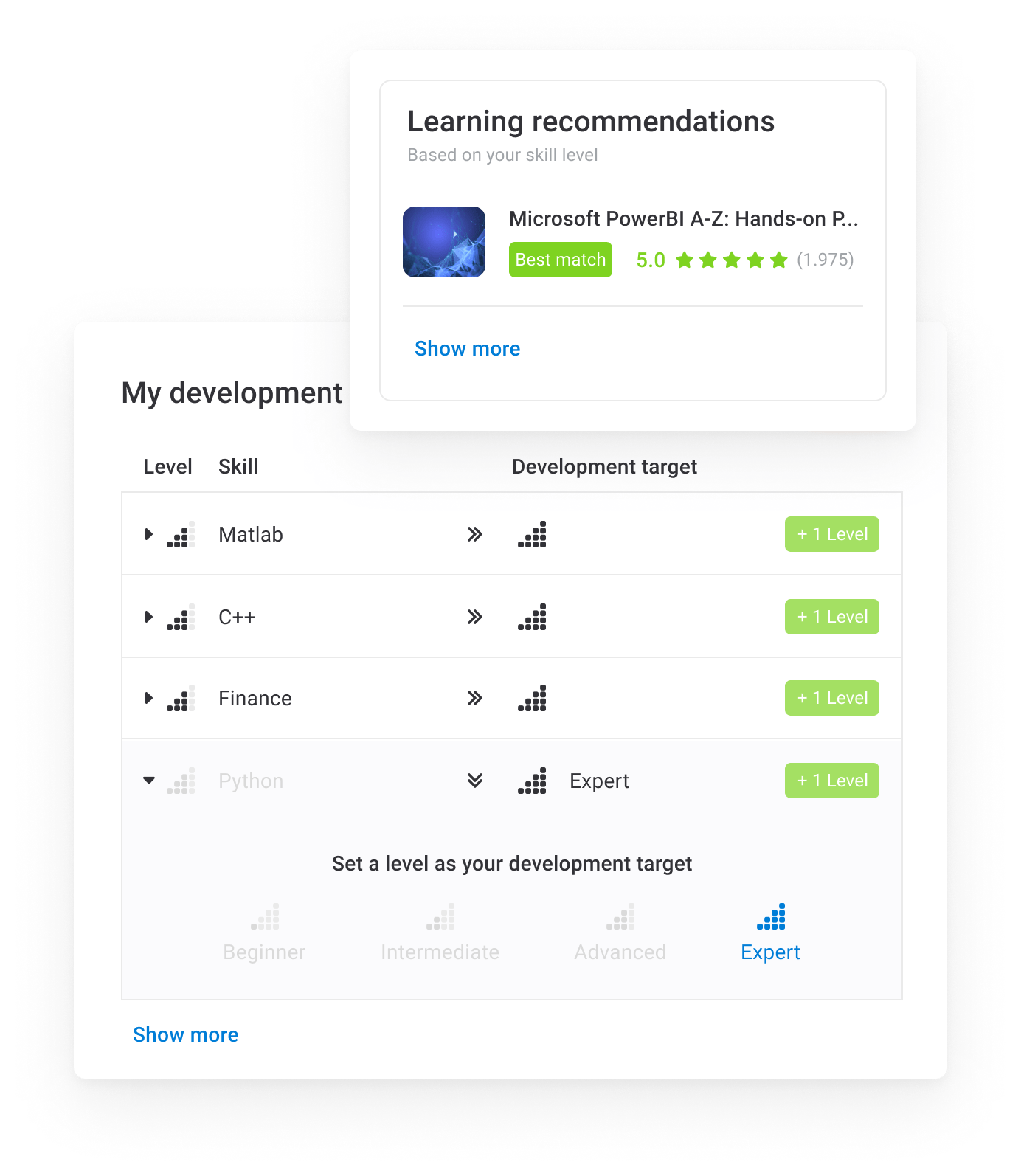

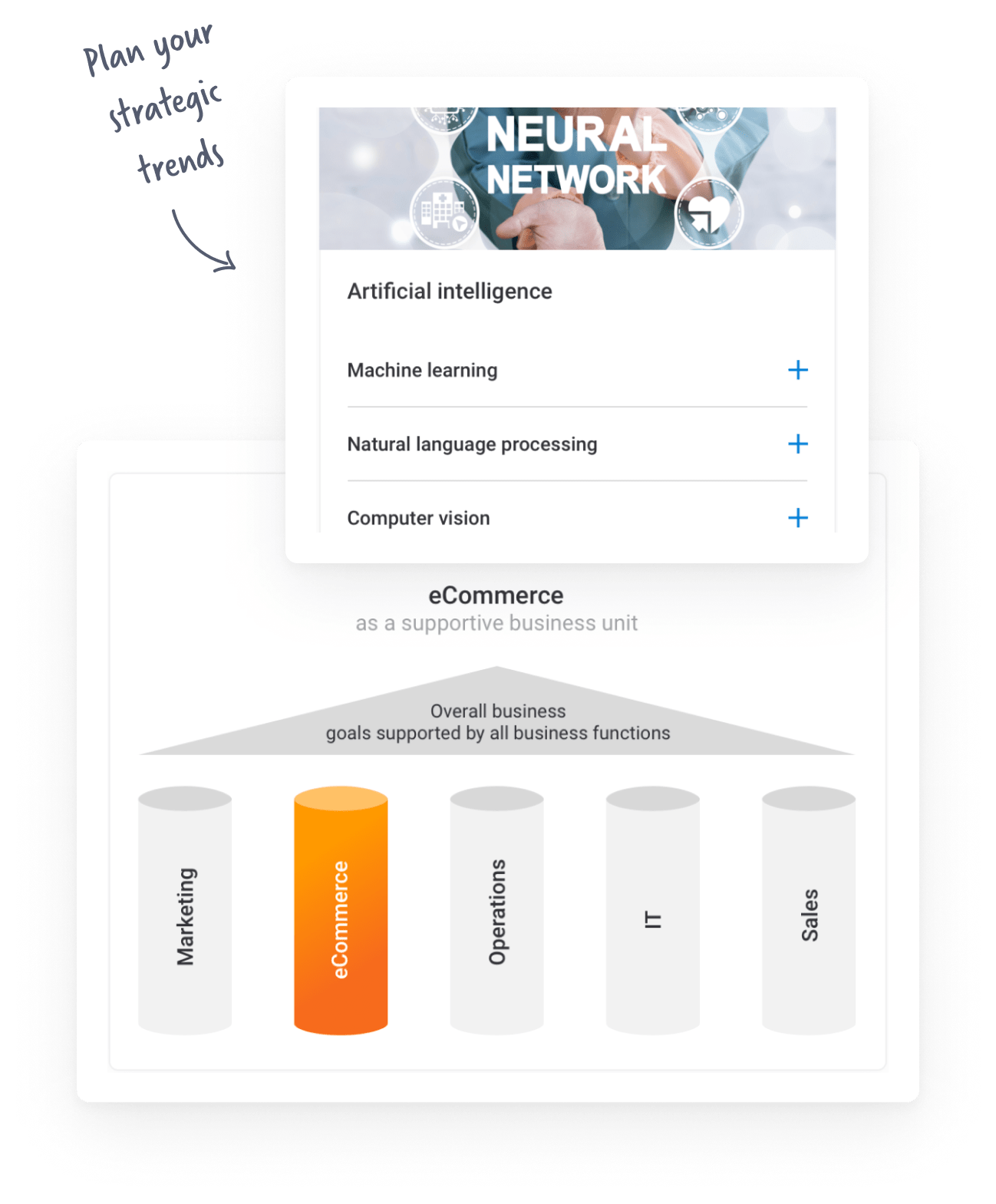

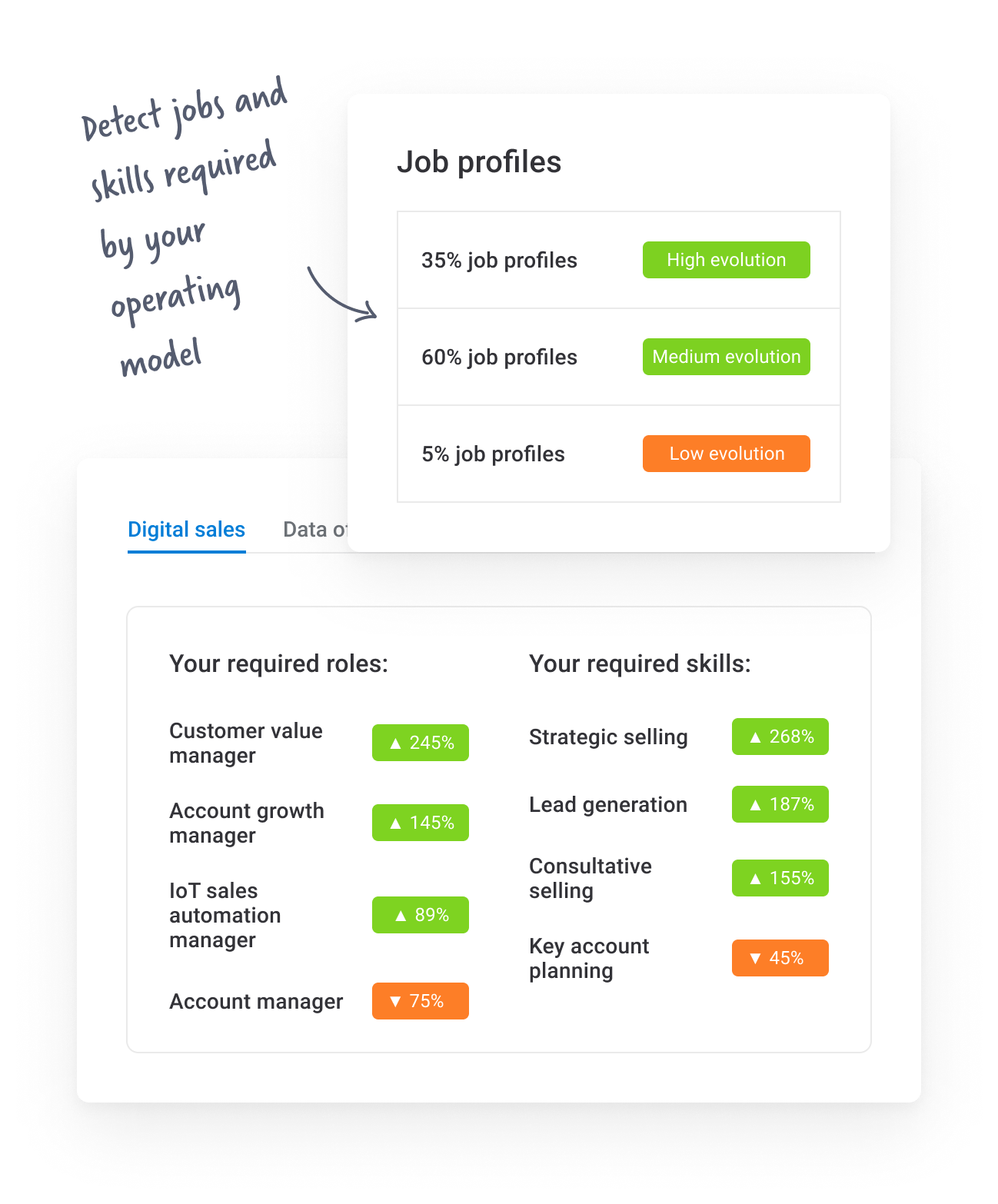

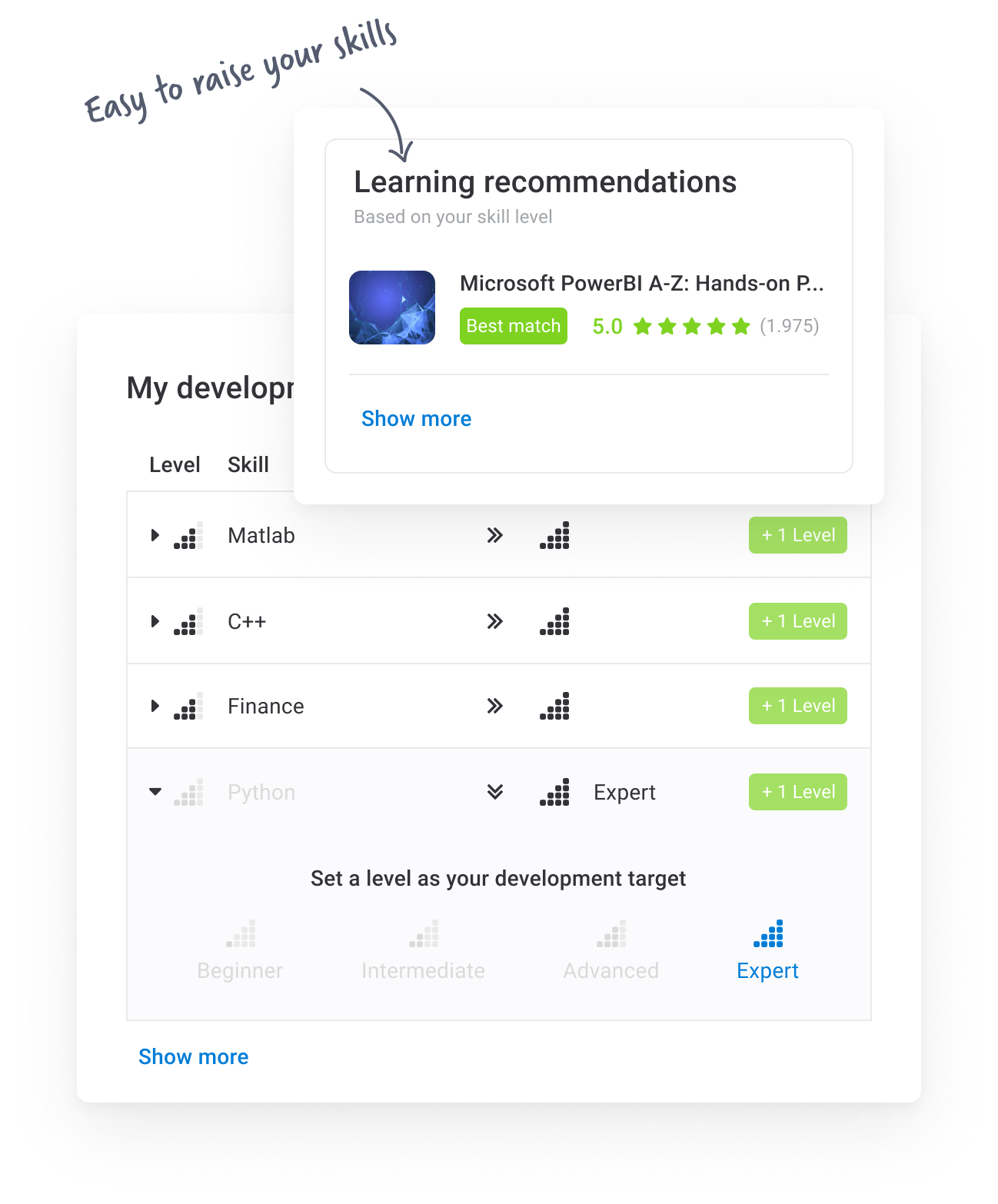

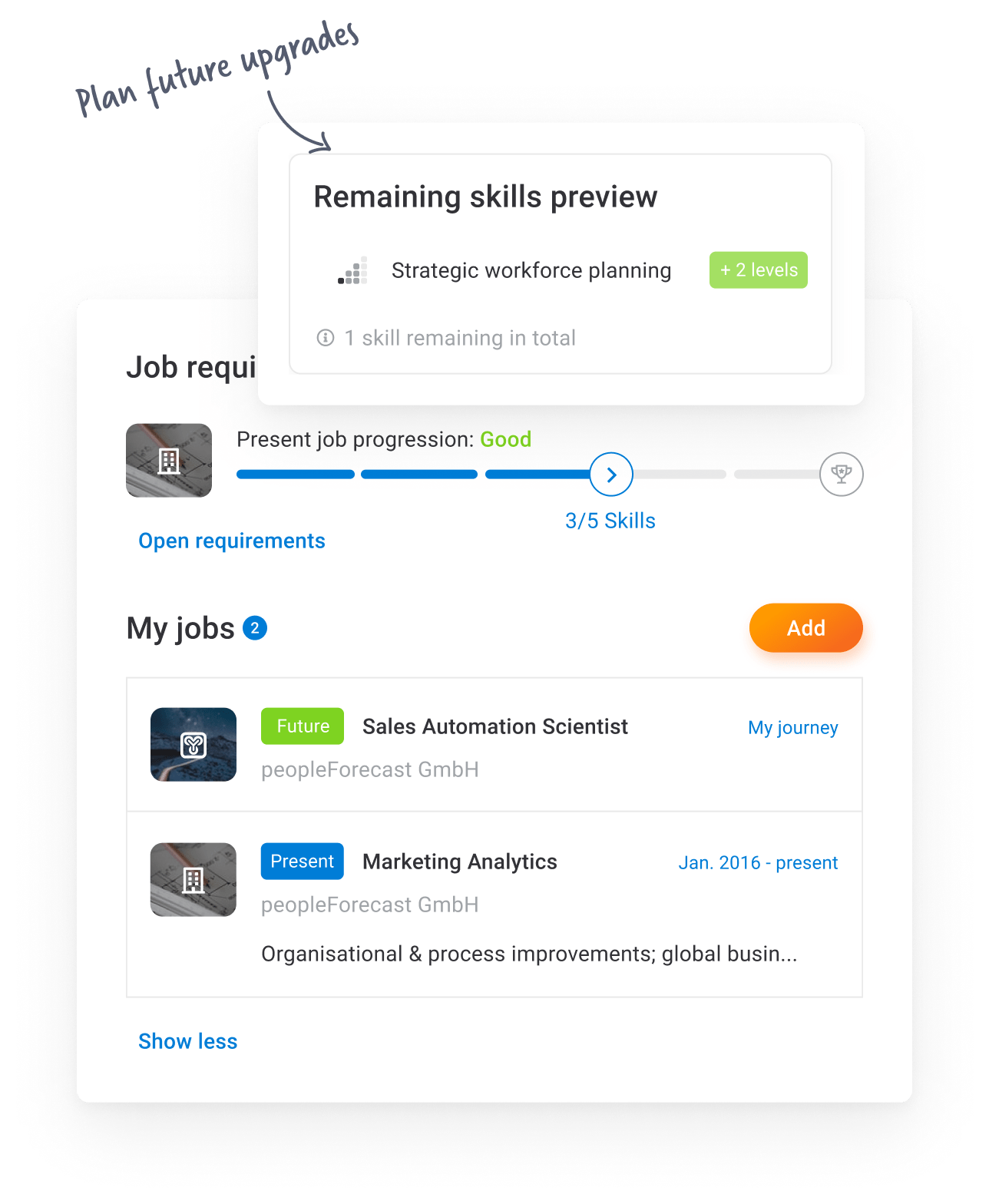

A skill-based organization approach

Content intelligence insights, tips, and solutions

Use cases you can cover with HRForecast

What is digitalization?

Talk with Partha Neog

Business restructuring in time of COVID-19

How to transform HR with future workforce insights

Building a future-proof workforce: what you need to know

Plan the future of HR: your future workforce and talent demands

Future of people analytics

Future job roles & skills in Norwegian construction

Talent acquisition dialogue

Future Skills Report Chemie 2.0

Navigating the Impact of AI: Empowering the Workforce for Tomorrow

Invite us to your event

See why 100+ companies choose HRForecast.

Know more

We help you build a skilled and future-proof workforce through data-driven insights and SaaS solutions.

See why more than 100 companies choose HRForecast for people analytics.

successfully completed projects

We work with state-of-the-art technologies like big data and AI to deliver robust and scalable software solutions

We have been named one of the fastest-growing technology companies in Germany

We are listed by Bersin among the top people analytics providers globally, leading in Europe

We run a separate instance for each client to provide maximum security and protection

Our solutions are designed for fast implementation and are ready to use within a few weeks

We deliver fully customizable solutions that solve your tasks and are easy to integrate with your existing software

Do you know which half it is within your company?

Learn how companies make data-driven workforce decisions with HRForecast.

“HRForecast’s data analytics driven approach delivers tangible value and insights to inform and shape Senior Business and HR leadership strategic workforce planning decisions.”

Alexis Saussinan, Global Head of People Analytics & SWP, Merck Group

View case

“I was impressed by the ease with which the Big Data concept of HRForecast provided transparency, benchmark information and therefore a target-oriented analysis of skills.”

Michael Herrmann, Managing Director, Lufthansa Systems Hungary

View case

“With HRForecast we were able to define necessary skill profiles and training recommendations in an automated way. We were able to better concentrate on alignment with experts as well as on guiding the program.”

Thomas Berthold, Head of Expert Qualification Management, Deutsche Telekom AG

View case

“We conducted our first pilot with HRForecast thinking big data had potential; however, we were amazed at the transparency and insights it delivered. Since then we have conducted several projects with HRForecast.”

Dr. Ariane Reinhart, Member of Executive Board, HR

View case

Join our HR community on LinkedIn with our daily updates, industry news, and engaging conversations.

Our articles are your source of inspiration and useful content. Never miss a thing with our timely emails.

info@hrforecast.de

recruiting@hrforecast.de

2023 © Copyright - HRForecast | Imprint | Privacy policy | Terms and conditions (MSA)